Classification of Business Activities by Machine Learning: The Case of France.

30 April 2024

Context

- Sirene is the French national company registry

- When a company registers, an activity code is attributed

- Challenges:

- Refactoring of the Sirene information system

- NACE revision to come in 2025

- Teams still overwhelmed

- End of March 2024:

- Officially switch from Sirene 3 to Sirene 4

- Consequence: an ideal moment to innovate (but under constraints!)

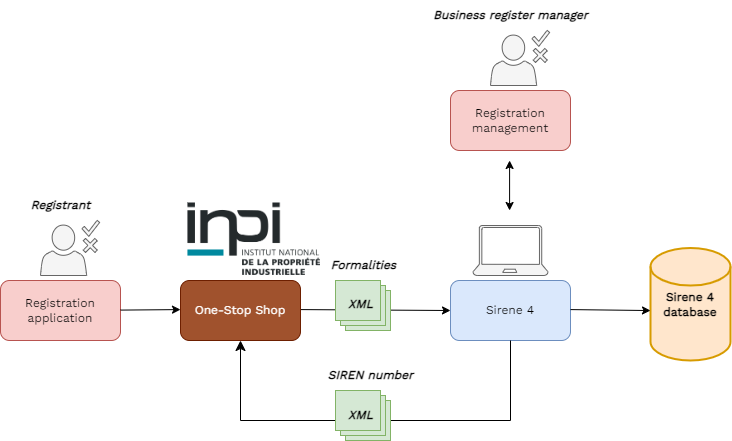

The "flow" of formalities

The administrative landscape

- Siren number: company directory identification system

- Principal activity code (APE)

- Classification on a daily basis

- Different administrations

- Different information systems

- Requirements: quick, responsive and flexible to updated instructions

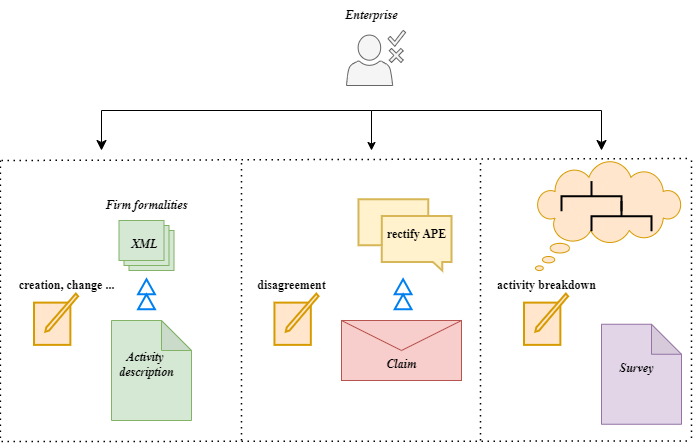

Assign an activity code: different processes

Assign an activity code: two outcomes

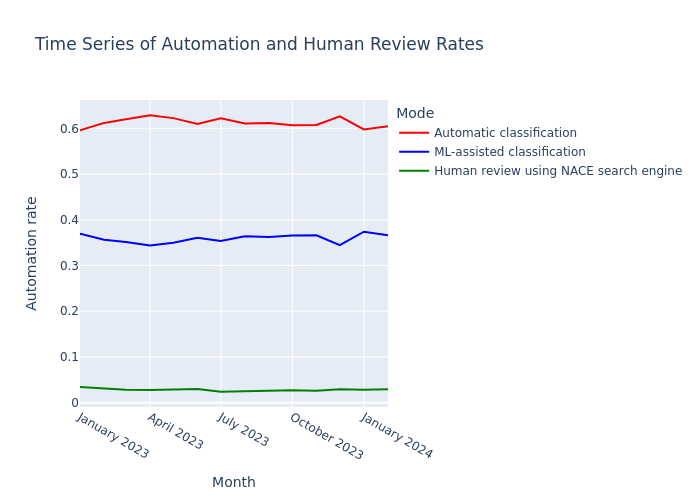

- Automatic coding

- Human review
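One way to picture the two outcomes is as a routing rule on the classifier's output. The sketch below assumes the model returns a confidence score and that a fixed threshold decides between the two paths. The `Prediction` type, the `route` function and the `0.8` threshold are hypothetical and are not taken from the Sirene system.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    code: str          # predicted activity code (placeholder values in examples)
    confidence: float  # model score in [0, 1] (assumed to be available)


def route(prediction: Prediction, threshold: float = 0.8) -> str:
    """Return 'automatic' when the score reaches the threshold, else 'human_review'."""
    # Assumption: a single global threshold; a real system might tune it per code.
    if prediction.confidence >= threshold:
        return "automatic"
    return "human_review"
```

Raising the threshold sends more cases to human review and lowers the risk of a wrong automatic code; lowering it does the opposite.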

Near-ubiquity of ML

Automatic activity classification

Human review

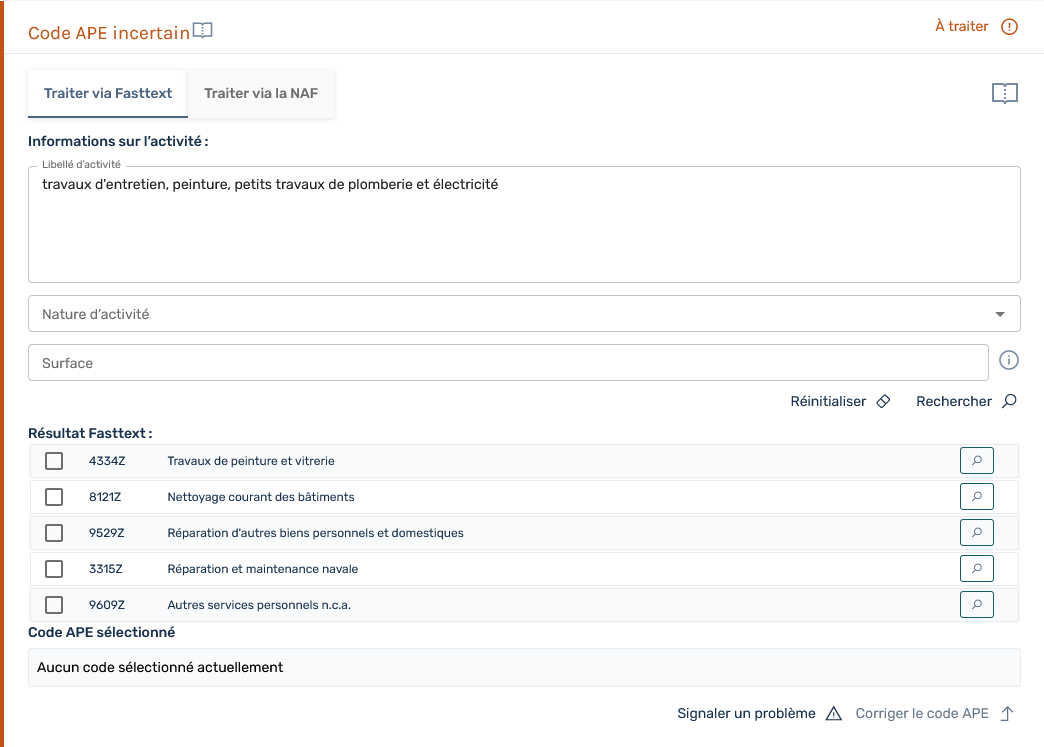

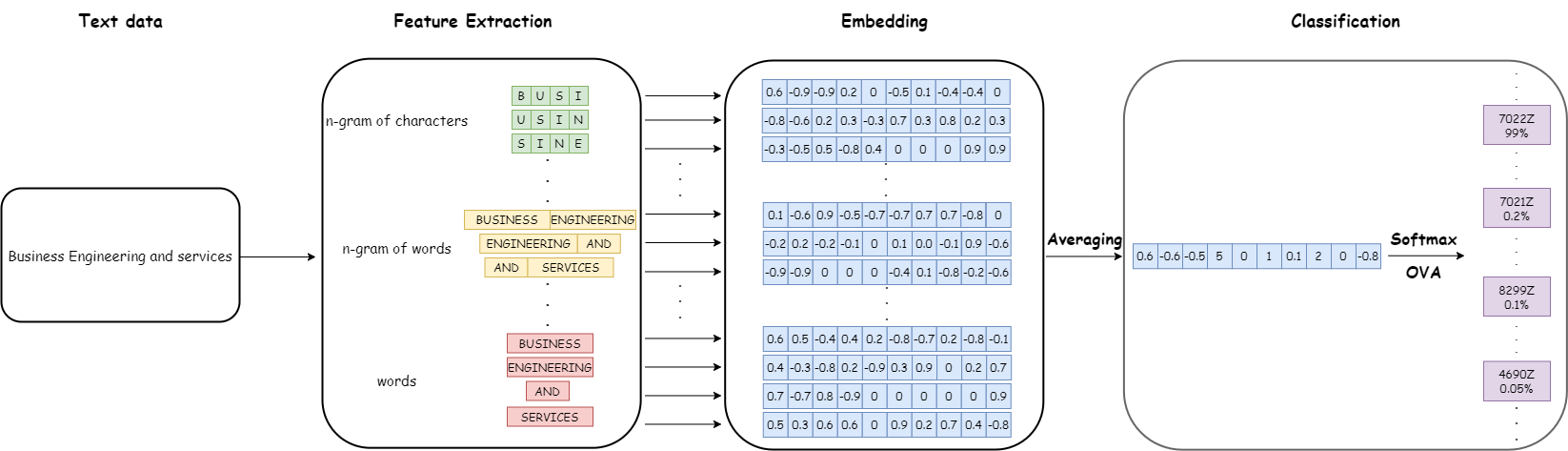

Model

- Text classification model which uses additional categorical variables

- For now we use the fastText library

- Originally trained on legacy data annotated partly by the coding engine and partly manually
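A minimal sketch of how a free-text description and categorical variables could be combined into a single fastText training line. It assumes each categorical variable is encoded as one extra token appended to the text; this is an illustrative choice, not necessarily how the production model is built. The feature name `legal_form`, its value and the label `CODE_A` are placeholders.

```python
from typing import Dict, Optional


def to_fasttext_line(
    description: str,
    categorical: Dict[str, str],
    label: Optional[str] = None,
) -> str:
    """Build one line in fastText's supervised text format."""
    # Assumption: each categorical variable becomes a single "name=value" token.
    tokens = [
        f"{name}={value}".replace(" ", "_")
        for name, value in sorted(categorical.items())
    ]
    text = " ".join([description.lower().strip(), *tokens])
    # fastText's default label prefix for supervised training is "__label__".
    if label is not None:
        return f"__label__{label} {text}"
    return text


line = to_fasttext_line(
    "Boulangerie artisanale", {"legal_form": "EI"}, label="CODE_A"
)
```

A file of such lines could then be passed to `fasttext.train_supervised(input=...)`, and the same function without a label would prepare text for `model.predict`.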

FastText

- a rapid and lightweight model

Calendar on new NACE adoption

- 2025: statistical business register adopts new NACE

- Dual Coding

- major coding with NACE rev 2

- minor coding with NACE rev 2.1

- 2026: administrative business register adopts new NACE

- Dual Coding

- major coding with NACE rev 2.1

- minor coding with NACE rev 2

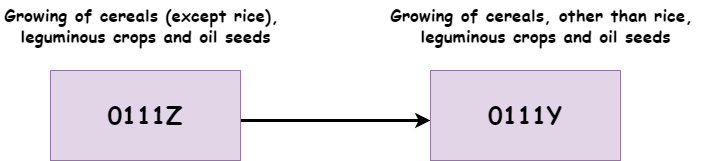

1-to-1 correspondence

- An easy and ideal case

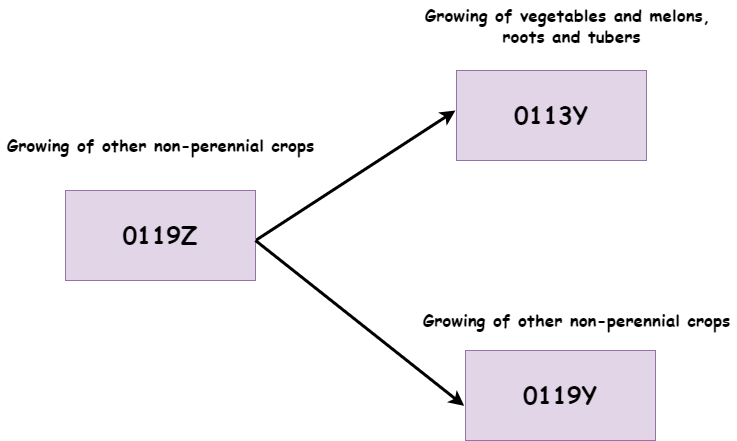

1-to-many correspondence

- A less desirable case

- Requires an expert decision based on the activity description
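The distinction between the two kinds of correspondence can be sketched as a split of the correspondence table. The example assumes the table is available as a mapping from each NACE rev 2 code to its candidate NACE rev 2.1 codes; the function name and the codes `OLD_A`, `NEW_B1`, etc. are placeholders, not real NACE codes.

```python
from typing import Dict, List, Tuple


def split_correspondence(
    table: Dict[str, List[str]],
) -> Tuple[Dict[str, str], Dict[str, List[str]]]:
    """Separate codes that map to one new code from those that map to several."""
    one_to_one: Dict[str, str] = {}
    one_to_many: Dict[str, List[str]] = {}
    for old_code, new_codes in table.items():
        candidates = sorted(set(new_codes))
        if not candidates:
            raise ValueError(f"no correspondence for {old_code}")
        if len(candidates) == 1:
            # Recoding is mechanical: no expert needed.
            one_to_one[old_code] = candidates[0]
        else:
            # Several candidates: the activity description must decide.
            one_to_many[old_code] = candidates
    return one_to_one, one_to_many


table = {"OLD_A": ["NEW_A"], "OLD_B": ["NEW_B1", "NEW_B2"]}
direct, ambiguous = split_correspondence(table)
```

Only the entries in `ambiguous` would need an expert decision; the entries in `direct` can be recoded automatically.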

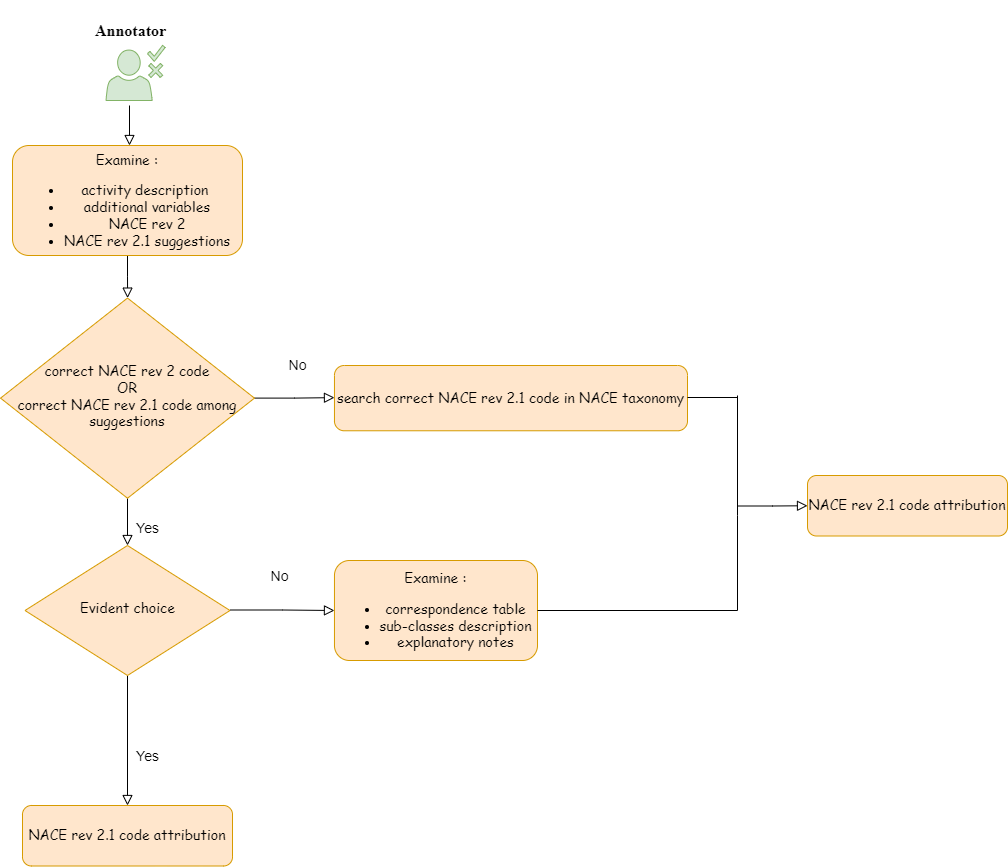

Annotation campaign strategy

- A one-shot operation spanning multiple months in 2024

- manual labeling only on the 1-to-many codes

- select data to annotate from registrations filed since the one-stop shop was introduced

- kill two birds with one stone

- check NACE rev 2 coding quality on the 1-to-many codes

- attribute a NACE rev 2.1 code

Labeling method

Reduce tasks to annotate

- Theoretical 1-to-many cases can turn out to be 1-to-1 in practice

- Duplicated textual descriptions may occur due to shared practices among registrants

How to reduce the workload for our annotators?

- adapt correspondence table by considering real-world business rules

- avoid giving the same textual descriptions to annotate
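The deduplication idea can be sketched as follows: group descriptions that are identical after light normalization, annotate each group once, then copy the label back to every member. The normalization rules (accent stripping, lowercasing, whitespace collapsing) are an assumption, and the labels `NEW_X` and `NEW_Y` are placeholders.

```python
import re
import unicodedata
from collections import defaultdict
from typing import Dict, List


def normalize(text: str) -> str:
    """Strip accents, lowercase, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", text)
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return re.sub(r"\s+", " ", no_accents.lower()).strip()


def group_duplicates(descriptions: List[str]) -> Dict[str, List[int]]:
    """Map each normalized description to the indices where it occurs."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for index, description in enumerate(descriptions):
        groups[normalize(description)].append(index)
    return dict(groups)


def propagate(groups: Dict[str, List[int]], labels: Dict[str, str], size: int) -> List[str]:
    """Copy the label given to each unique description back to all its duplicates."""
    result = [""] * size
    for key, indices in groups.items():
        for index in indices:
            result[index] = labels[key]
    return result


descriptions = ["Vente de vêtements", "vente de  vetements ", "Conseil en gestion"]
groups = group_duplicates(descriptions)  # 2 annotation tasks instead of 3
labels = {"vente de vetements": "NEW_X", "conseil en gestion": "NEW_Y"}
full = propagate(groups, labels, len(descriptions))
```

Stricter or looser normalization trades annotation savings against the risk of merging descriptions that an expert would code differently.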